Blog 0.2: Introduction to PySpark

Introduction to PySpark

PySpark is a Python API for Spark released by the Apache Spark community to support Python with spark. Apache Spark is a popular open source framework that ensures data processing in a reliable speed and supports various languages like Scala, Python, Java, and R.

In this blog, I will demonstrate the basic understanding of PySpark using Jupyter Notebook. PySpark is the collaboration of Apache Spark and Python.

Reason for Selecting Pyhton to work with SPARK

- Python provides simple and comprehensive API

- Python is a simple and easy to learn in comparison with other programming languages

- Python is backed by huge and active community

- Python has an abundant Libraries to perform data visualization and data manipulations like NumPy, Scikit-learn, seaborn, etc.

Spark Session

Spark session is an unified entry point of a SPARK application that allows to interact with various spark’s functionality. If someone is familiar with terms like spark context, hive context, SQL context, now all of these context is enscapulated with spark session.

Following lines of code shows the steps for creating a spark session.

import findspark

findspark.find()

output:

‘C:\Users\limbu\spark-3.0.1-bin-hadoop3.2’

This determines the installed location for Pyspark directory.

Now, we can create a SPARK session using the jupyter notebook.

from pyspakr.sql.functions import SparkSession

spark= SparkSession\

.builder\

.getOrCreate()\

.appName("findInfo")

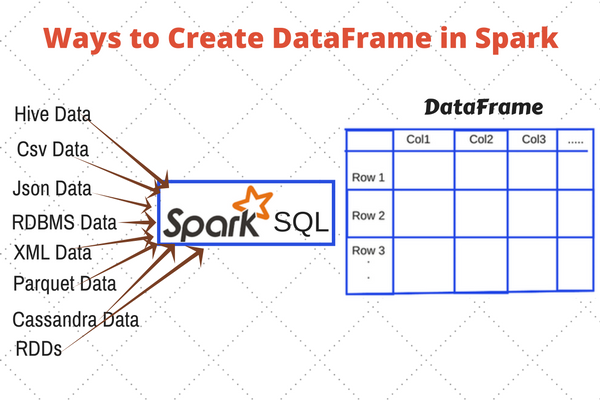

DataFrames in Spark

DataFrames are designed for processing large collection of structured or semi-structured data. For optimization purpose, DataFrame in are organized under named columns which are supported by wide range of data format and sources. It has API support for different languages like Python, R, Scala, and Java.

staticDataFrame= spark.read.format("csv")\

.option("header","true")\

.option("inferSchema","true")\

.load("C:/Users/spark-3.0.1-bin-hadoop3.2/data/retail-data/by-day/*.csv")"

In the above code, several ‘csv’ files are read from a directory which are on csv format.

- Header option determines the first row as Header of the file which is set to True

- InferSchema option determines the data type of each column. Setting InferSchema to true will set exactly the same data type of the original file

NOTE: Leaving the InferSchema to default (False) will create a DataFrame with String data type for all columns. This is particularly useful when presenting the format of the DataFrame due to processing time for just single data type (string)

SCHEMA

A Schema represents the structure of your data (which together create a dataset in Spark SQL). It can be implicit (and inferred at runtime) or explicit (and known at compile time).

staticDataFrame.printSchema

output:

<bound method DataFrame.printSchema of DataFrame[InvoiceNo: string, StockCode: string,

Description: string, Quantity: int, InvoiceDate: string, UnitPrice: double, CustomerID: double,

Country: string]>

In the next blog, I will demonstrate more functions of pyspark.